Learn the new-gen Research Skills that initiate projects in 3D Perception, Occupancy Networks, and End-To-End Learning for self-driving cars.

This course is currently closed and will open in March 2026. Join the waitlist to be notified when it opens, and to receive goodies while you wait.

Self-Driving Car Engineer who trained over 10,000 Self-Driving Car Engineers will show you...

Dear friend, if you are currently learning a lot about autonomous driving, but still feel a bit "disconnected" from the state of the art, then this page will show you not only how to connect better, but how to appear like a complete field insider.

Here's the story:

A few months ago, one of my clients booked a call with me to ask me a pressing question:

"I've mastered all the core skills we talk about. 3D Object Detection, Sensor Fusion, Tracking, ROS, you name it. This allowed me to get a job in self-driving cars, but as I'm here on my first weeks, I can't help but feel a disconnection with other engineers in the company. It's like even though I spent all this time learning the core skills used in self-driving cars, I'm never really able to become one of them. Is it because I'm a self-taught? How can I fix it?"

I understood that feeling quite well. It's like when you've learned English but still struggle with native English speakers. You understand each other, but they have a way of speaking you just don't possess.

If you are like my client, you may be asking yourself questions such as:

"Are my skills good enough?"

"What's next for me to learn? Where does my journey go?"

"Am I learning the right skills?"

Back when I tried to answer him, I came up with a long explanation that later became a concept I want to introduce here:

When learning self-driving cars, most engineers go straight for what we could call "core skills". These are the kind that get you through the door. They're the algorithms for 3D Object Detection, the Sensor Fusion techniques that fuse pixels with point clouds, or the Localization methods that spot your car on the map. These are Python, C++, and ROS. Nearly all job descriptions are full of these "core skills".

But then, there's another set of skills, which I call "Stealth Skills". The ones that don't make it to the job description headlines but make all the difference once you're in. These are skills engineers wouldn't even think of listing, but that they still use every day.

And there are many of them. Examples? Think Sensor Calibration, Model Deployment, Multi-Task Learning, Deep Learning Optimization or HD Map Creation.

All these skills aren't really "learnable" unless you work in the industry... unless you're already "in". Sometimes, you will even see Stealth Skills becoming core skills, like Neural Optimization in the past few years.

And if, like the engineer who contacted me, you want to feel more connected, more aware, but also appear more knowledgeable and ultimately look like an insider, you will want to master Stealth Skills.

There is no shortcut to stealth skills. A stealth skill is spotted while listening to interviews, talking to engineers, or while reading research papers. I've found many stealth skills while listening to CVPR conference talks, and obviously, while talking with field engineers. It's all very "manual", and it can take you hours just to spot a keyword that uncovers a giant mountain of skills you can learn.

To give you another example, I recently discovered many stealth skills while giving a week-long seminar to Mercedes-Benz on LiDAR, and by being in touch with and following the work of field engineers like Holger Caesar, co-author of the "PointPillars" algorithm, or David Silver, Self-Driving Car Engineer at Kodiak, and my first teacher.

So what is the biggest Stealth Skill I discovered recently? It holds in 3 words: Bird Eye View.

Why?

Well, as I said, look at conferences, read papers, and see how engineers talk. Once you see it, you can't unsee it. Bird Eye View has taken over Autonomous Driving Research. You can see it at every single Tesla AI Day conference, you can see it used in Waymo research papers, and implemented in State of The Art Sensor Fusion algorithms. You can see it used at Wayve in End-To-End Learning, and coupled with Transformers.

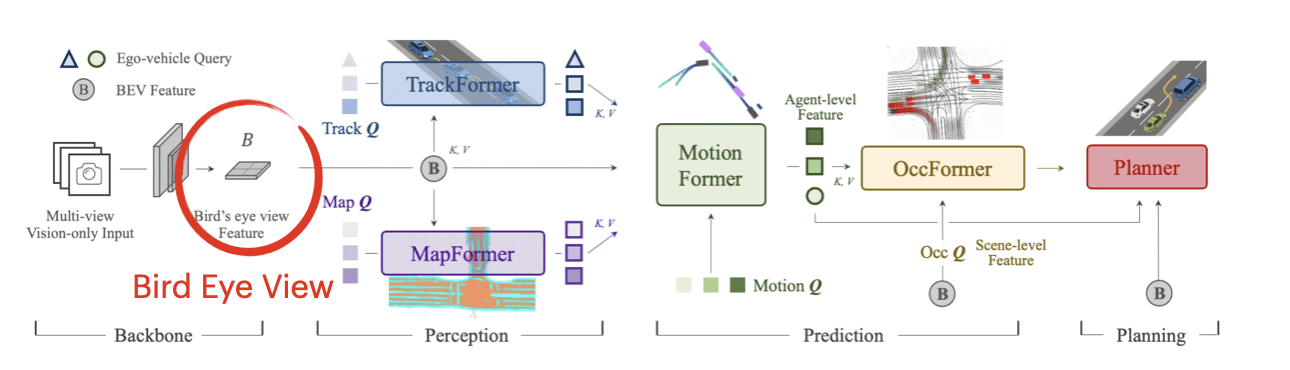

Let's look at the paper that won CVPR 2023: Planning Oriented Autonomous Driving.

This is a paper building an End-To-End autonomous driver. You just input your camera images, and the network handles everything. It not only won the "oscar", but also got hundreds of students trying to learn from it.

Yes! It all starts with a Bird-Eye View Conversion!

The Bird Eye View is almost a universal format in self-driving cars, because it can be used for Object Detection & Tracking, for Mapping, for Occupancy Detection, for Motion Detection, and ultimately for Planning. You can, and in most cases should, do everything in Bird Eye View.

Today, Bird Eye View is used for 3D object detection, HD Mapping, 3D-2D Sensor Fusion, Occupancy Prediction, Flow Prediction, Occlusion Reasoning, Voxelization, Motion Planning, and virtually every task you need in autonomous driving.

Don't take my word for it, look at the winning research papers, and the approaches presented by self-driving car startups.

And if you pay attention, you will start seeing Bird Eye View everywhere...

The quick answer is: it's a stealth skill. Many engineers just don't know they can learn it. And in fact, no platform offers a course on it, because they focus not only on "core-skills", but also on "mass-market" skills. There is no way to specialise, and to really go advanced.

When I first started to learn about Bird Eye View, I was working on an OpenCV lane detection project. The Bird Eye View algorithm was a bit slow, completely done with traditional approaches, all very manual, error-prone, and a bit random.

But today, we can retrieve a Bird Eye View with Deep Learning, using Spatial Transformers, CNNs, Attention Mechanisms, but also Depth Maps, Geometry, and we can even fuse Bird Eye View from multiple cameras into a single, unified representation.

All of this not only takes time to learn, but it's also technically very difficult. Which is why I created a roadmap for Engineers who want to learn about Bird Eye View.

Introducing...

Learn how to build Bird Eye View Maps and turn them into inputs for Localization, Mapping, and Planning algorithms.

MODULE 1

In this first module, you'll learn how to get a Bird Eye View from any input. We'll visit traditional approaches to build Bird Eye Views on top of camera images.

What you'll learn:

The real reason engineers use Bird-Eye View in Computer Vision (other than being handy, BEV solves massive problems related to camera-only solutions, and LiDAR/Camera conversion formats, more in the introduction)

How to solve the Depth Inconsistency Problem with Bird-Eye View & Bilinear Interpolation (in an image, nearby objects are represented by hundreds of pixels, but far away objects are only shown by 2 or 3 pixels -- this creates large errors at long range, and makes Computer Vision solutions impossible to trust, unless you use this BEV trick)

An almost unknown way to use Depth Maps to build Bird-Eye View images (hint: many engineers use Depth Maps to find depth, but when you swap some axes and do some conversions, you can effectively recover a Bird Eye View)

The secrets of Feature Lifting: A brand new discovery which can not only create a Bird Eye View, but also "export" your 2D feature maps to the 3D space (we'll see code to create a 3D frustum, project feature pixels to the 3D space, and recreate an entire Bird Eye View just from images)

The only 9 camera parameters you need to build Bird-Eye View from Geometry, and the mathematics behind them

A thrilling overview of all the "Geometric" approaches for Bird Eye View projections, including the Inverse Perspective Mapping algorithm.

First 3 Workshops: Engineer a Bird-Eye View in 3 Different Ways, including hardcore 3D geometric approaches, as well as Depth approaches.
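To make the Depth Map hint above a bit more concrete, here is a minimal sketch of the general idea (an illustration, not the workshop code). It assumes an ideal pinhole camera with no lens distortion and a depth map that stores the forward distance Z in meters. The names `depth_to_bev`, `fx`, `cx`, `x_range`, `z_range` and `cell` are hypothetical. Each pixel (u, v, Z) is back-projected to a lateral offset X = (u - cx) * Z / fx; dropping the image row v and keeping (X, Z) is the "axis swap" that yields a top-down grid.

```python
def depth_to_bev(depth, fx, cx, x_range=(-10.0, 10.0), z_range=(0.0, 20.0), cell=1.0):
    """Turn a depth map into a top-down grid that counts points per cell.

    depth   : list of rows, depth[v][u] = forward distance Z in meters (<= 0 means invalid)
    fx, cx  : pinhole focal length and principal point along the image x-axis, in pixels
    x_range : lateral extent of the grid in meters (left, right)
    z_range : forward extent of the grid in meters (near, far)
    cell    : size of one grid cell in meters
    """
    n_cols = int((x_range[1] - x_range[0]) / cell)
    n_rows = int((z_range[1] - z_range[0]) / cell)
    bev = [[0] * n_cols for _ in range(n_rows)]

    for row in depth:                      # the image row index v is not needed
        for u, z in enumerate(row):
            if z <= 0:
                continue                   # skip invalid depth values
            x = (u - cx) * z / fx          # back-project the pixel column to meters
            col = int((x - x_range[0]) // cell)
            r = int((z - z_range[0]) // cell)
            if 0 <= col < n_cols and 0 <= r < n_rows:
                bev[r][col] += 1           # one more 3D point falls in this cell
    return bev


# Two valid pixels: one 5 m ahead and to the right, one 10 m straight ahead.
depth = [
    [5.0 if u == 150 else 0.0 for u in range(200)],
    [10.0 if u == 50 else 0.0 for u in range(200)],
]
bev = depth_to_bev(depth, fx=100.0, cx=50.0)
occupied = [(r, c) for r, row in enumerate(bev) for c, n in enumerate(row) if n]
print(occupied)  # [(5, 15), (10, 10)]
```

A fuller version would also back-project the height (using `fy` and `cy`) to filter out the road surface before counting points; that step is left out here to keep the axis swap visible.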

If we pause here for a minute, this project is supposed to have anyone crying in pain -- but we made it easy to understand, so that you not only understand how projective geometry works for Bird Eye View, but you also build practical skills you can re-use when doing any kind of projection!

The first time I learned about Bird Eye View, it was through these orthodox, well established, and accepted traditional approaches. Almost a decade later, these approaches are still used, and even integrated in Deep Neural Networks.
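As an illustration of these geometric approaches, here is a minimal sketch of Inverse Perspective Mapping. It is written under strong simplifying assumptions that are mine, not the course's: a perfectly flat road, a pinhole camera with no distortion, and a camera mounted at a known height with zero pitch and roll. It therefore uses a reduced parameter set (`fx`, `fy`, `cx`, `cy`, `cam_height`) and makes no claim about which 9 parameters the module refers to. All function and argument names are hypothetical. For every cell of the top-down grid, the ground point (X, Z) is projected into the image with u = fx * X / Z + cx and v = fy * cam_height / Z + cy, and the nearest pixel is copied.

```python
def inverse_perspective_mapping(image, fx, fy, cx, cy, cam_height,
                                x_range=(-5.0, 5.0), z_range=(2.0, 12.0), cell=1.0):
    """Resample a camera image onto a flat-ground top-down grid.

    image      : list of rows, image[v][u] = pixel value
    fx, fy     : focal lengths in pixels
    cx, cy     : principal point in pixels
    cam_height : camera height above the road in meters (camera Y axis points down)
    Returns a grid where bev[r][c] is a pixel value, or None if the cell is not visible.
    """
    height, width = len(image), len(image[0])
    n_cols = int((x_range[1] - x_range[0]) / cell)
    n_rows = int((z_range[1] - z_range[0]) / cell)
    bev = [[None] * n_cols for _ in range(n_rows)]

    for r in range(n_rows):
        z = z_range[0] + (r + 0.5) * cell          # forward distance of the cell center
        for c in range(n_cols):
            x = x_range[0] + (c + 0.5) * cell      # lateral offset of the cell center
            u = int(round(fx * x / z + cx))        # pinhole projection of (x, cam_height, z)
            v = int(round(fy * cam_height / z + cy))
            if 0 <= u < width and 0 <= v < height:
                bev[r][c] = image[v][u]            # nearest-neighbor sampling
    return bev


# Synthetic image whose pixel value is its own (u, v) coordinate, to see where cells sample.
image = [[(u, v) for u in range(200)] for v in range(200)]
bev = inverse_perspective_mapping(image, fx=100.0, fy=100.0, cx=100.0, cy=100.0, cam_height=1.5)
print(bev[0][5])  # (120, 160): a nearby cell samples low in the image
print(bev[9][0])  # (61, 113): a far cell samples close to the horizon row
```

Notice how far-away cells all sample rows just below the horizon: this is the long-range imprecision mentioned earlier, and the reason interpolation matters in practice.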

In module 2, we'll move on to the Deep Learning part, and see how to do Bird Eye View with Deep Learning.

MODULE 2

Once you know how Traditional Bird Eye View works, we'll move to the Deep Learning side -- you'll study Spatial Transformer Networks, Temporal Transformers, and Multi-View fusion to build robust Bird Eye View features.

What you'll learn:

BEVFormer: An In-Depth look at the most powerful 3D Object Detector, and how it uses Bird Eye View Grids to map objects into a common representation.

How to combine Geometry and Deep Learning in algorithms like Spatial Transformers to make algorithms converge 100x faster (hint: using geometry will act like using a pre-trained model: traditional geometry techniques are okay, but Deep Learning acts as a "refiner" in our case)

The 3 Core Blocks of a Spatial Transformer Network, and a step-by-step guide on how to turn Features into Bird Eye View features (in this part, we'll actually visualize all the features ourselves, at each step of the network, to understand when the features are fused, and how much they're transformed)

A look at some Pure Neural Net approaches to estimate Bird Eye View without depth maps or geometric parameters (yes, this is possible, but has one major drawback, more details in the summary of this module)

Practical Secrets to compress datasets from hundreds of GB to a few MB (it works for almost all image-based datasets, and we'll use one-hot encoding and decoding for segmentation tasks).

How to train a Bird Eye View Network, including the loss function you should use, and the hyperparameters suitable for this kind of transformation.

🎁 BONUS: My PyTorch 101 Cookbook explaining the 4 most important things you should know about PyTorch, and telling you in 5 minutes how to read any PyTorch code.

The really juicy secret to combine multiple cameras into a single "top down" view of the world (Note: we will see how some approaches, like simple concatenation, can help with high overlap, but how some others, such as Transformer Networks, are better suited when cameras are not mounted on the same vehicle, for example for surveillance)

Let's pause a minute here.

Do you see this last bullet point? I have been asked about it COUNTLESS times by engineers and companies in literally every industry. From retailers who want to fuse different security cameras, to autonomous shuttle companies who surround their vehicles with cameras and want to "stitch" them.

It's actually a problem I was exposed to by a Mercedes-Benz team while giving a seminar to them in India, and their solution was again... Bird-Eye View. This is why you'll learn to use Bird-Eye Concatenators to fuse features together, but also other approaches like Transformer Networks and Temporal Fusion.
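As a rough illustration of what concatenation-style fusion can look like, here is a minimal sketch. It reflects my assumption about the general pattern, not the course's "Bird-Eye Concatenator" implementation: every camera is assumed to have already been converted to a Bird Eye View feature map on the same grid, the maps are stacked along the channel axis, and a per-cell linear mix (what a 1x1 convolution computes) blends them. The names `concat_fuse`, `bev_maps` and `weights` are hypothetical, and plain Python lists are used to keep the example dependency-free; with PyTorch you would typically reach for `torch.cat` along the channel dimension followed by a 1x1 `nn.Conv2d`.

```python
def concat_fuse(bev_maps, weights):
    """Fuse per-camera BEV feature maps by channel concatenation + per-cell mixing.

    bev_maps : list of N maps, each shaped [C][H][W] and aligned on the same BEV grid
    weights  : [C_out][N * C] mixing matrix, standing in for a learned 1x1 convolution
    Returns a fused map shaped [C_out][H][W].
    """
    # Channel-wise concatenation: N maps of C channels become one map of N * C channels.
    stacked = [channel for cam in bev_maps for channel in cam]
    n_rows, n_cols = len(stacked[0]), len(stacked[0][0])

    fused = []
    for w_row in weights:                  # one output channel per weight row
        out = [
            [sum(w * ch[i][j] for w, ch in zip(w_row, stacked)) for j in range(n_cols)]
            for i in range(n_rows)
        ]
        fused.append(out)
    return fused


# Two single-channel cameras, each seeing a different corner of a 2x2 BEV grid.
front = [[[1.0, 0.0], [0.0, 0.0]]]
rear = [[[0.0, 0.0], [0.0, 2.0]]]
fused = concat_fuse([front, rear], weights=[[1.0, 1.0]])
print(fused[0])  # [[1.0, 0.0], [0.0, 2.0]]
```

Concatenation works because the cameras already share one grid; when they don't (different vehicles, surveillance setups), the alignment itself has to be learned, which is where attention-based approaches come in.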

Finally:

🚀 DEEP BIRD EYE VIEW PROJECT: Build and Train the Multicam Fusion Network that does Bird Eye View Visualization & Semantic Segmentation.

This "capstone" project will be very important, not only because it will give you something very powerful to show off to recruiters and on LinkedIn, but also because it will "unleash" a completely different power within you: turning features into Bird Eye Views.

The final project is every bit as good as these. In fact, you'll learn to architect a complete solution for Bird Eye View Estimation and Semantic Segmentation in Self-Driving Cars.

Like this:

MODULE 3

In this third module, you'll learn about the use of Bird Eye View in Lane Detection, HD Mapping, Motion Planning, Object Detection, Large Language Models, and more... In this module, we will BEGIN with a Bird-Eye View image as input, and then explore our options to make cars autonomous.

You will learn:

Tesla's Occupancy Flow Prediction Explained: How to plan a trajectory once you have an "Occupancy Flow" (I talked a lot about Tesla's Occupancy Networks in my emails and blog, but I stopped at the flow prediction. In this part, we'll see how to use Occupancy Flows to predict a trajectory)

Wayve's "FIERY" algorithm, fieryously analyzed; and the 5-step roadmap to estimating probability distributions on Bird Eye Views.

A look at Planning Oriented Autonomous Driving: The CVPR 2023 Winner doing End-To-End Autonomous Driving using Bird Eye View Estimation as a starting point for Mapping, Object Detection & Tracking, Occlusion Detection, Motion Planning, and more...

How to turn USELESS drivable area segmentation algorithms into mandatory HD Maps using Bird Eye Views (incidentally, we'll also study the core components of an HD Map, the types of formats used, and get an introduction to mapping in autonomous driving)

Why Bird Eye View is the best choice for Deep Sensor Fusion, and the secrets of "BEV Fusion", the most popular Sensor Fusion algorithm.

How to Plan, Perceive, and Predict in a Bird Eye View space, and the 5 Key Steps you should implement to build motion planners.

Why End-To-End Autonomous Driving mostly happens in Bird Eye View spaces, and my opinion on End-To-End for the future of self-driving cars

3D LaneNets: A look at the traditional and Deep Learning based approaches for lane line detection using Bird Eye Views (you will see an implementation of the most coded Lane Line Detection algorithm, as well as one for a 3D Lane Line Detector)

What the Dataset of a Lane Line Detection Algorithm looks like (and the 3 types of Deep Learning based Lane Line Detectors you can implement on Bird Eye Views)

How to run Object Detectors on top of a Bird Eye View image, and the best "heads" used for 3D Object Detection

What Orthographic Feature Transforms are, and how they are relevant in the field of Object Detection

The never told "gun to the head" story of how I was LEGALLY FORCED to build a Bird Eye View system to keep my startup from becoming criminal (There are some cases where safety measures demand that you provide context, and where Bird Eye View is practically the only solution allowed. I'll reveal the details I can share in this module)

and many more...
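To give a flavor of what "planning in a Bird Eye View space" can mean, here is a minimal sketch of one common pattern: scoring candidate trajectories against a BEV occupancy grid and keeping the cheapest one. This is an assumption-laden illustration, not the 5 steps taught in the module and not how any specific company does it. The grid layout, the penalty for leaving the grid, and the names `trajectory_cost` and `pick_trajectory` are all hypothetical.

```python
def trajectory_cost(occupancy, trajectory, x_min, z_min, cell):
    """Sum the occupancy probabilities of the BEV cells a trajectory passes through.

    occupancy  : grid of rows, occupancy[row][col] in [0, 1]; rows go forward, cols go right
    trajectory : list of (x, z) waypoints in meters, in the same frame as the grid
    x_min, z_min : metric coordinates of the grid's near-left corner
    cell       : size of one grid cell in meters
    """
    cost = 0.0
    for x, z in trajectory:
        col = int((x - x_min) // cell)
        row = int((z - z_min) // cell)
        if 0 <= row < len(occupancy) and 0 <= col < len(occupancy[0]):
            cost += occupancy[row][col]
        else:
            cost += 1.0                    # assumption: unknown space is treated as occupied
    return cost


def pick_trajectory(occupancy, candidates, x_min=-2.0, z_min=0.0, cell=1.0):
    """Return the candidate trajectory with the lowest accumulated occupancy cost."""
    return min(candidates, key=lambda t: trajectory_cost(occupancy, t, x_min, z_min, cell))


# 4 m x 4 m grid with one likely obstacle 2 to 3 m ahead, slightly to the right of center.
occupancy = [[0.0] * 4 for _ in range(4)]
occupancy[2][2] = 0.9

straight = [(0.5, 0.5), (0.5, 1.5), (0.5, 2.5), (0.5, 3.5)]
swerve_left = [(0.5, 0.5), (-0.5, 1.5), (-0.5, 2.5), (-0.5, 3.5)]
best = pick_trajectory(occupancy, [straight, swerve_left])
print(best is swerve_left)  # True
```

Because detection, mapping, and prediction outputs can all be rasterized onto the same grid, a cost like this can combine them by simple addition, which is a large part of why planning tends to happen in this space.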

In this last module, we'll go Practical, and you WILL have a lot of work. In fact, each of the 5 categories I mentioned (Sensor Fusion, Object Detection, Lane Detection, Planning, Mapping) will have not only explanations on the latest papers, but also "exploratory assignments" for you.

For example:

One of the mapping assignments is to download autonomous driving HD Maps (that I provide), load them into mapping software I used in the field, and then see code to convert Bird Eye Views into similar HD Maps. This way, you not only know how to build a Bird Eye View, but you also know the "end goal", which is to convert that view into a map.

And we will do similar assignments, some very short, and some quite long, for all topics. These assignments will not all be mandatory, but you could easily spend an entire month just on each of them if you choose to.

This is the key benefit of Module 3: we will see a complete range of applications of Bird Eye View, from sensor fusion to planning, to HD Mapping, and even to End-To-End Learning...

While you may be seduced by the keywords and videos I provided, you may also have questions to determine whether you should join or not. So let me try and answer some of them:

The goal of this course is to teach you how to build and use Bird Eye View in self-driving cars and other robot Perception algorithms. After the course, you will be familiar with the traditional and Deep Learning approaches to build Bird Eye Views, and you will know how companies in the field use Bird Eye View Perception.

Yes, to lifetime access.

As for the prerequisites and duration, the course lasts around 5 hours (but can become weeks if you do the exploratory projects), and requires you to:

Already be familiar with Python (Object Oriented Programming is enough), and fundamental Deep Learning (2D CNNs, PyTorch, ...) and Maths (matrix multiplications, rotations & translations, ...)

Ideal: You already know what an Auto-Encoder is, and how to build segmentation approaches

Ideal: You know the secrets of camera calibration, from intrinsic to extrinsic parameters.

✅ If you're currently looking to increase your skills massively in the field of autonomous driving, and trying to position yourself as an expert, then the course is typically for you.

❌ On the other hand, if you're too much of a beginner, or aren't ready to acquire "intermediate" skills, you might not be a good fit. For example, if you enroll without knowing about PyTorch, it's ok, and I provide a PyTorch Cookbook that will help you with that, but you're supposed to show the patience to learn this intermediate step. Same for camera calibration.

Something to get about Bird-Eye View...

Beginners typically don't learn about Bird Eye View.

It's the type of skill they don't really know is a thing. Some can do a few OpenCV tricks, but it stops there. So seeing an engineer applying Spatial Transformers to features and doing 3D Multi-Cam Fusion is one of a kind.

If you're looking to position yourself as an expert, then Bird Eye View can be a great way to do this, as it has the "built-in" expertise and is usually enough for recruiters to put you OUT of the "beginner" category.

"As the pricing is very competitive, I had no particular obstacle purchasing this course.

I found the course extremely valuable as it provided a high-level understanding, references for deeper knowledge, and hands-on experience with the new concepts.

The feature I liked most was the workshops, which offered practical application of the theories discussed. Additionally, the course includes cutting-edge material that is not available anywhere else.

I would absolutely recommend this course. It’s perfect for anyone looking to expand their understanding and gain practical experience with advanced concepts in this field."

Ali S | Bird Eye View Edgeneer

This course is the first ever course on Bird Eye View Perception for autonomous driving.

While many other Computer Vision courses will focus on the "core mass-market" object detection algorithms, this course focuses on advanced techniques used by researchers.

This is important, because while most engineers focus on "core" skills, knowing the "Stealth Skills" allows you to specialize as an Autonomous System Engineer and be seen as an insider of the field.

249€ or 2 x 124.50€
