299€ or 2 x 149.00€

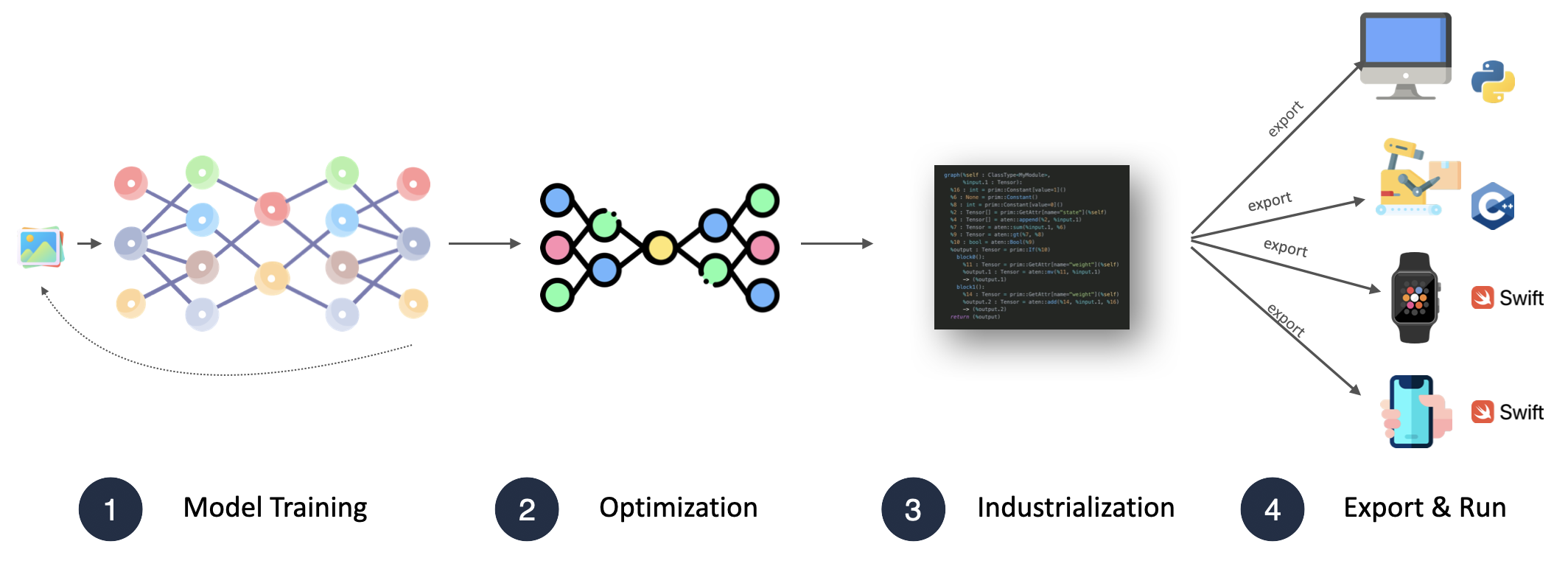

For aspiring Deep Learning Professionals looking to build ready-to-deploy models and make a bigger impact.

The third course I have taken with Think Autonomous, and it won't be my last!

This course demystified popular techniques for optimizing models for deployment, and made them more approachable.

Great course!

A great course with thorough and clear explanation! Highly recommended!

Thanks for sharing the content. I am reading it again and again. It's the most distilled, crystal-clear, laser-sharp explanation of the topic.

Am still distilling the content word by word. Will go through both courses again (HydraNet and Neural optimization) to beef up my understanding having rearmed.

The inclusion of the appendix was an excellent move. Such addenda are great companions. Keep doing this for other courses.

Lifetime Access to:

The Pro Player Tactics to Optimize Models

The Model Deployment Guide

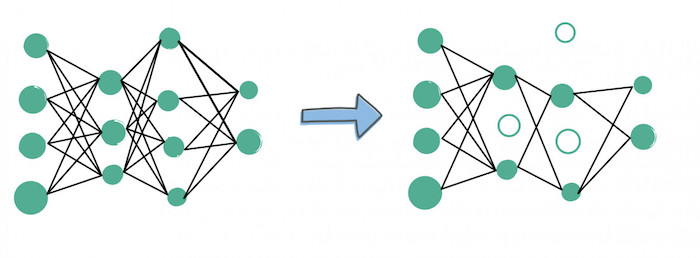

An In-Depth Look at Model Pruning, Knowledge Distillation, and Quantization