249€ or 2 x 125€

An advanced course for engineers who want to master sensor fusion in 2D and 3D.

Introducing...

Designed for future cutting-edge engineers who already have a background in image processing or point cloud processing and want to work on sensor fusion technologies.

Let me show you the program:

ADRIAN ROSEBROCK | CEO, PYIMAGESEARCH

—


Lifetime access to:

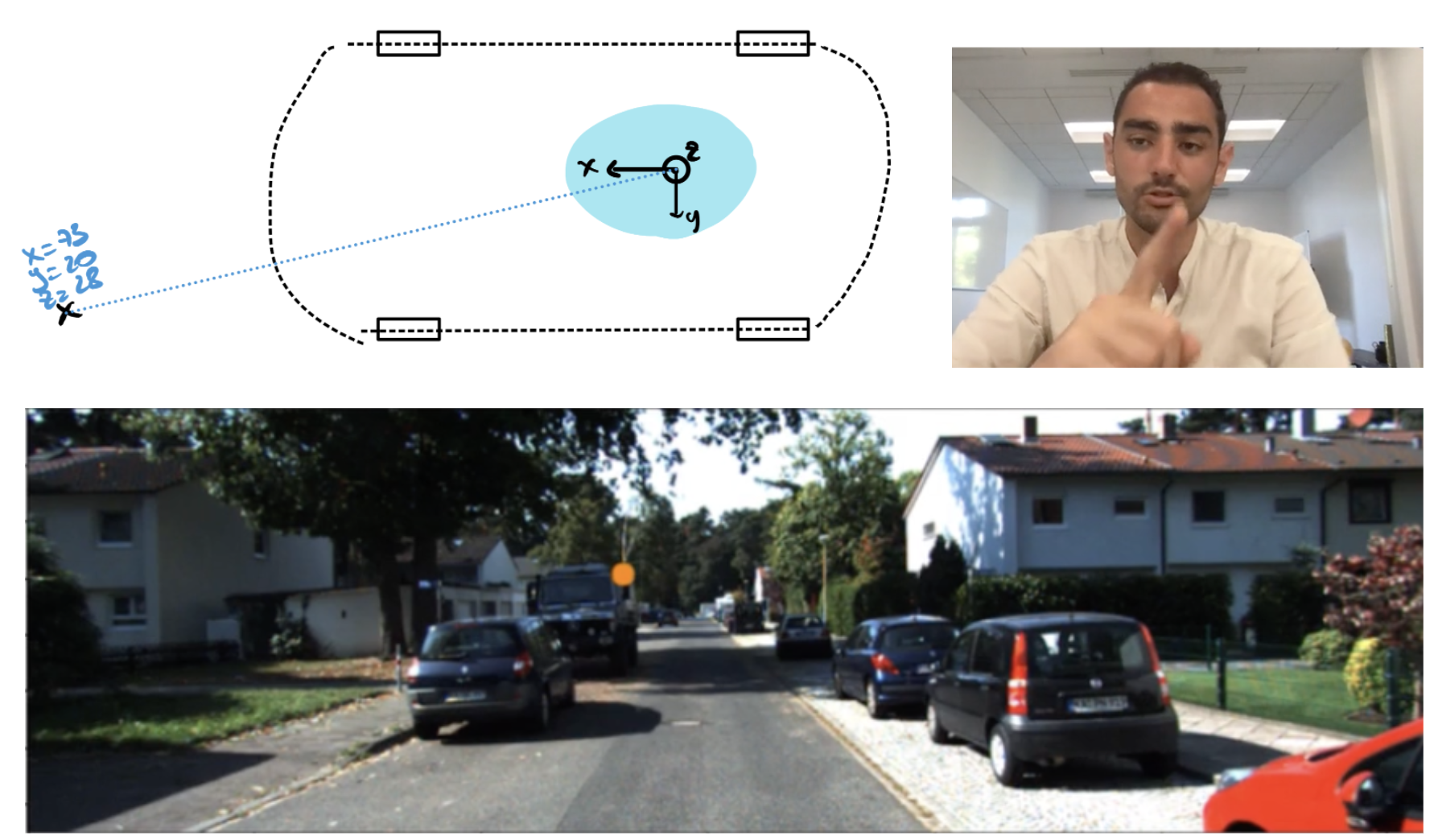

Introduction to Sensor Fusion

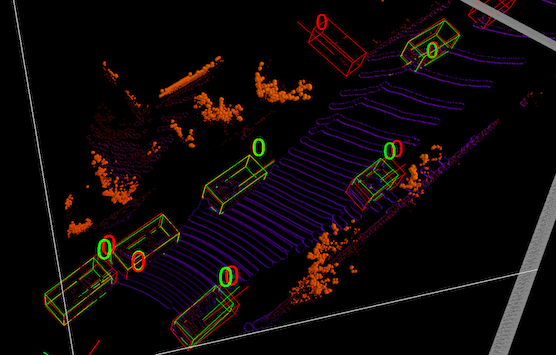

Point Pixel Fusion Approaches

Box To Box Fusion

Plus

The Visual Fusion MindMap, which you can revisit months from now to recall everything as if you had just learned it.